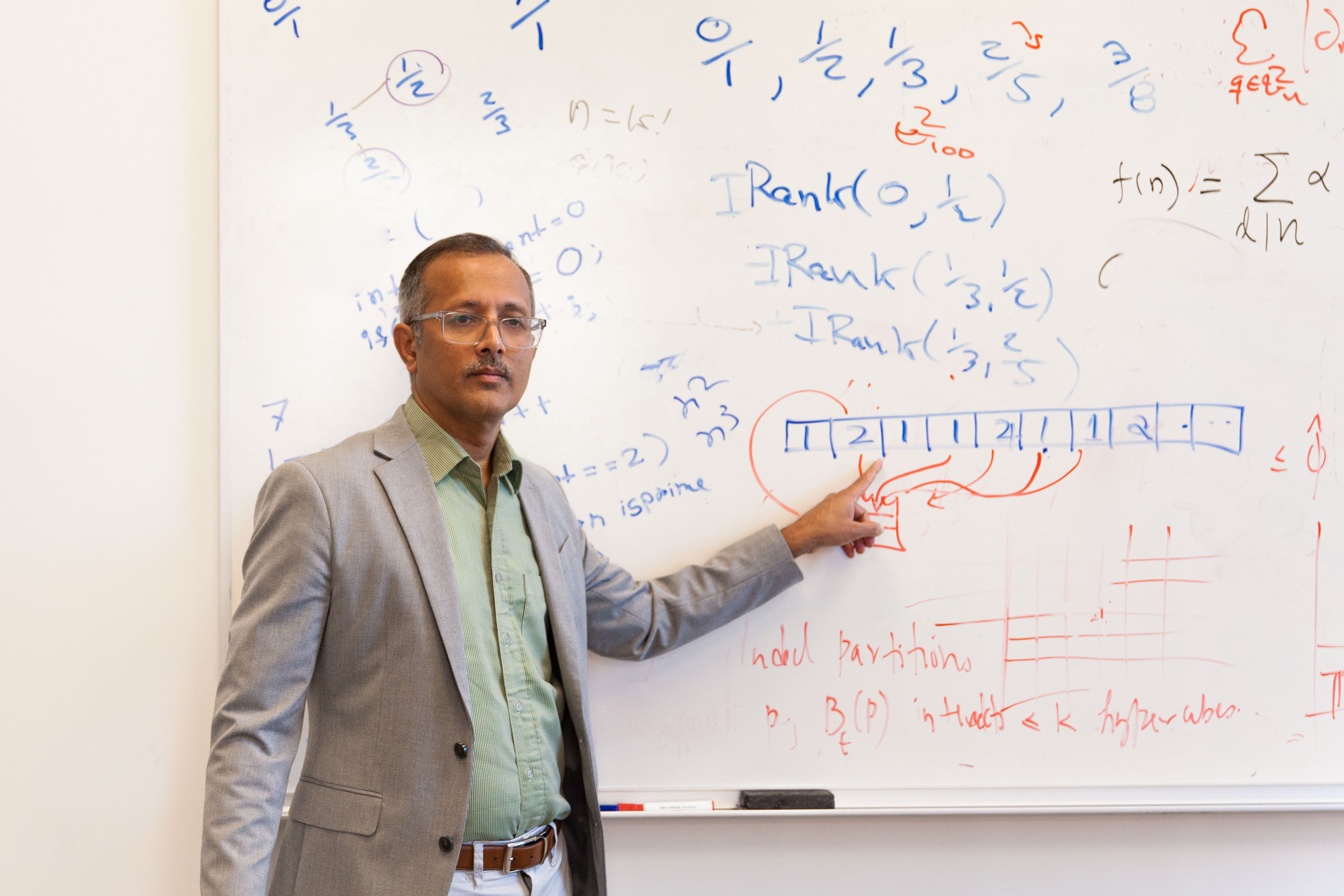

School of Computing Professor Vinodchandran Variyam was on sabbatical at the National University of Singapore and enjoying dinner with his colleagues when a discussion topic sparked an idea that could potentially change massive data analysis.

Variyam and his colleagues, Kuldeep Meel, an associate professor at NUS, and Sourav Chakraborty, a computer scientist at the Indian Statistical Institute Kolkata, were discussing a fundamental problem in large data analysis: the Distinct Elements Problem.

The Distinct Elements Problem is the algorithmic task of approximating the number of unique data elements in instances when it may be impractical to store or process the entire dataset. Its applications span many fields, including network traffic analysis, database management, marketing, bioinformatics, and text analysis. It could also be used for a variety of purposes, such as fraud detection, in which an efficient algorithm could quickly flag unusual but not immediately recognizable patterns that deviate from the expected or previous historical patterns.

Developing an algorithm to perform what may seem like a straightforward task has proven to be a significant challenge for computer scientists over the years, but they may now have a solution in a new algorithm.

“Earlier known algorithms all were ‘hashing based,’ and the quality of that algorithm depended on the quality of hash functions that algorithm chooses,” Variyam said. “The new algorithm only uses a sampling strategy, and quality analysis can be done using elementary techniques.”

The new algorithm developed by Variyam and his colleagues uses a sampling strategy that reduces memory requirements, making it highly scalable, which is essential in computing environments where large amounts of data often must be processed very quickly. Analysis can be done using basic probabilistic reasoning, which is also critical in algorithm development today.

“It is surprising that this simple algorithm had not been discovered earlier,” Variyam said. “It is not uncommon in science that simplicity is missed for several years.”

The authors presented their new algorithm at the 2022 European Symposium on Algorithms, a premier international conference on algorithm design. Since then, their discovery has been gaining recognition and praise from computer scientists across the field, including world-renowned computer scientist Donald Knuth, author of The Art of Computer Programming who is often referred to as "the father of the analysis of algorithms.”

In May of this year, Knuth published his own paper on the algorithm, “The CVM Algorithm for Estimating Distinct Elements in Streams,” offering extensive praise and even naming it the CVM algorithm in honor of its inventors. Knuth said in addition to “explaining the ideas to just about everybody [he] meets,” he expects the algorithm to become a foundational aspect of computer science in the near future.

"Their algorithm is not only interesting; it is extremely simple,” Knuth said in the paper. “It’s wonderfully suited to teaching students who are learning the basics of computer science. I’m pretty sure that something like this will eventually become a standard textbook topic.”

Many other computer scientists have already begun building on the concept, while the original inventors are continually fine-tuning it.

“We are constantly thinking about how this algorithm can be further improved,” Variyam said. “Another set of researchers is looking into additional properties, such as estimation bias of the algorithm.”

As Knuth predicted and Variyam hoped, the algorithm has already found a place in computer science courses.

“Many researchers have communicated to us that they will teach the algorithm in their course. Some already have,” Variyam said. “We believe that this will be a mainstream algorithm that is taught in the first computer science course on algorithms in general and probabilistic algorithm in particular.”