Sydney Brown, assistant director of the Center for Transformative Teaching, explores how to use NotebookLM to create large machine-gradable question banks efficiently and effectively.

Instructors need an efficient and effective way to create large machine-gradable question banks for three primary reasons, namely to:

- create more opportunities for students to practice recalling and applying their knowledge,

- make more use of open educational resources to reduce costs for students,

- discourage cheating.

But writing good questions is difficult and time-consuming, even when instructors thoroughly understand what makes for effective multiple-choice or multiple-select questions. Moreover, beyond writing the questions and answers, building each item in Canvas by pointing, clicking, and typing or by copying and pasting takes a nontrivial amount of time.

To address these challenges, I started looking for an AI that would do the following:

- help me write good items that conform to research-supported best practices,

- make it easy to verify the items and their responses,

- format the items so they can be bulk imported into Canvas.

I ended up making use of three software tools:

- Google’s NotebookLM, which is powered by Google’s Gemini AI and is currently free of charge to use.

- Microsoft Excel to put the questions and responses into a table format.

- Kansas State University’s Classic to Canvas (QTI 2.0) Converter, which takes a .csv file saved from Excel and turns it into a QTI package (.zip file) that can be imported into Canvas quizzes.

Step 1: Why NotebookLM?

NotebookLM allows users to upload or specify sources that it should prioritize when responding. Other AI tools, such as the GPTs you can create in ChatGPT or Claude’s Projects, will also do this, but NotebookLM goes one better: it provides reference links that take the user to the very sentence and paragraph in the source document that it is citing. That’s right, the exact sentence. This makes it much easier for the user to verify the AI’s output.

Step 2: Provide question-writing guidance to the AI.

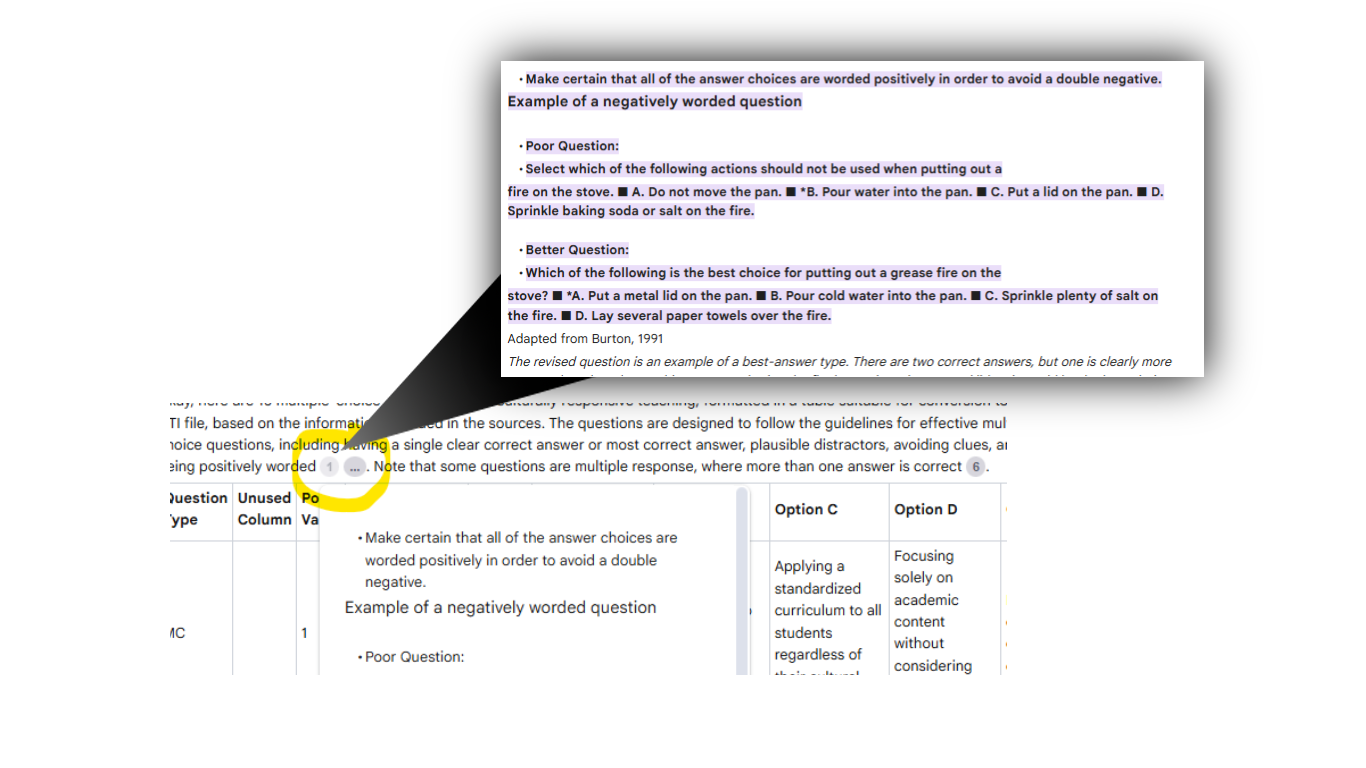

In this example, I gave NotebookLM clear guidance on writing good questions by uploading the publicly available “Developing, Analyzing, and Using Distractors for Multiple-Choice Tests in Education: A Comprehensive Review,” an article called “Creating more items more quickly in Canvas,” and a succinct overview of good practices drawn from the following sources:

Brophy, T. S. (n.d.). A practical guide to assessment. University of Florida Office of Institutional Assessment.

Burton, S. J., Sudweeks, R. R., Merrill, P. F., & Wood, B. (1991). How to prepare better multiple-choice test items: Guidelines for university faculty. Brigham Young University Testing Services and the Department of Instructional Science.

DiSantis, D. J. (2020). A step-by-step approach for creating good multiple-choice questions. Canadian Association of Radiologists Journal, 71(2), 131–133.

Office of Instructional Resources. (1991). Handbook on testing and grading. The University of Florida.

Step 3: Provide content on which the questions are based.

While the AI could create questions about a domain you specify in the prompt, you will more likely want to test student knowledge of the content you presented in class. Consequently, you’ll need to upload that content to the same notebook. NotebookLM allows up to 50 sources in a single notebook. In my exploration, I used only a single document about culturally responsive teaching, written by Geneva Gay (2001). All the source documents I used to explore this approach are publicly available online. Visit Google support for more information about how NotebookLM handles privacy.

If you use this approach to create questions for a course, you will need to upload all relevant source materials.

Step 4: Consider the output you need.

To bulk import questions into Canvas, the question bank needs to be in QTI format. Kansas State University’s Classic to Canvas (QTI 2.0) Converter can produce this format, but it needs the question bank formatted as a spreadsheet and saved as a .csv file. This is not difficult, but you will need the converter’s template and would benefit from reading through the directions in this short blog post from Northwestern.

Upload the template to NotebookLM and add the blog post as another source. Now, NotebookLM should have everything it needs to help you generate good questions and put them in a format that will make it easier for you to verify correctness and get them into Canvas.

Step 5: Craft the prompt.

Remember the five elements of an effective prompt structure:

- Perspective or role

- Task description or objective

- Relevant context

- Specific instructions or considerations

- Output specifications

So, let’s give it a go:

You are an assessment specialist who is well known for creating effective multiple-choice and multiple-select questions.

You are going to help me create multiple-choice or multiple-select questions about culturally responsive teaching. Adhere to recommended practices for effective question writing and make it easy for me to verify whether or not your answers are correct.

Each item will be worth 1 point. Create at least __ questions and at most ___ questions. Each item should have __ possible responses.

Output the questions in a table formatted for easy import into the Classic to Canvas (QTI 2.0) Converter using this information:

- Column A is the type of question: MC (multiple choice) and MR (multiple response). For True/False questions, use MC.

- Column B is not used but must be there.

- Column C is the point value of the question. It can be from 0 to 100, with up to two decimal places (e.g., 3.33).

- Column D is the question body.

- Column E is the correct answer. The numbers 1-5 each correspond to one of the possible answers listed in columns F-J. Use 1 to indicate a, 2 to indicate b, 3 to indicate c, 4 to indicate d, and 5 to indicate e. For True/False questions, use 1 for True and 0 for False. Clear any unused cells.

- Columns F-J are the possible answer choices. You can have 2 or more.

Ask me any questions you have about completing this task before doing it. Once you receive my responses, complete the task step-by-step. Then, tell me about any barriers you encountered in doing this task and how I can better prompt you to do a better job next time.

In response to my prompt, NotebookLM asked these questions before proceeding:

Before creating the quiz questions, some clarification would be helpful.

- The sources discuss several aspects of culturally responsive teaching. Should the questions cover all of these areas, or should they focus on specific elements?

- Should the questions test recall of factual information, application of concepts, or a mix of both?

- The sources discuss the importance of avoiding bias, such as negative phrasing in distractors or clues to the correct answer in the stem or the answer options. Should I adhere to all of those guidelines when creating the multiple choice questions?

- Should I attempt to create distractors based on common errors, or use other methods?

- Should I prioritize having a single best answer in the multiple choice items, or is it okay to have multiple correct answers if I am using multiple-response items?

- Are there specific elements of culturally responsive teaching you would like me to emphasize in the quiz?

- Do you have a preferred method for ordering the distractors (e.g., logical, numerical, or random)?

Once I have a better sense of what you are looking for, I can generate the questions.

As these questions show, I could have included more information in the initial prompt. After I answered its questions, NotebookLM produced the table as requested. It also gave me useful feedback on what I could change to further improve its output:

Identifying Common Errors: While I attempted to use common errors to create distractors, it was not always clear what those errors might be from the provided sources. I relied more on the strategy of creating options that are similar in content and structure, or that represent common misconceptions or partially correct information. In future prompts, you may wish to provide information about common student errors related to the topic or ask that I use specific techniques to generate those distractors (like using a specific model or asking content experts).

Balancing Recall and Application: While I aimed for a mix of recall and application, some questions may lean more heavily on recall due to the nature of the source material. Providing specific scenarios or cases to base application questions on may help to emphasize that aspect of testing in the future.

Limited Number of Questions: While I was able to generate ten questions, some sources mentioned the importance of increasing the number of items to increase reliability. If a specific number of questions is needed, that should be specified so I can ensure that number is generated.

Clarity about the 'best' answer: The sources suggest that if you are asking for the "best" answer, that should be very clear. It would be helpful to have this criteria for best answer more explicitly stated or if there are other standards that should be used to determine the best answer.

I believe the questions I created align with the guidelines you provided and will be effective in assessing knowledge of culturally responsive teaching. However, by addressing the points above, I can provide even better support next time.

By asking for feedback both before the AI started the work and after it finished, I was able to improve my prompt and to identify what I might change, in the prompt or in the content provided to the AI, to get even better output.

Step 6: Generate the QTI file.

If the questions are properly formatted, copy and paste the table into Excel. If you don’t see the formula bar, turn it on from the View menu. As you use the arrow keys to move from cell to cell, each cell’s text appears in the formula bar, where it is easier to read and edit. Keep the NotebookLM chat open so you can jump to the source material as needed. When you finish verifying and editing, save the sheet as a .csv file.
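Before uploading, it can help to check the saved .csv for layout mistakes. The Python sketch below is not part of the workflow described here, and the function names `check_row` and `check_csv` are hypothetical. It assumes the column layout given in the Step 5 prompt (A type, B unused, C points, D question body, E correct answer, F-J answer choices), assumes that multiple correct answers for MR items are written comma-separated in column E (the prompt does not say), and accepts 0 in column E for MC rows because the prompt uses 0 for False. The converter’s own template and directions remain the authority on the real format.

```python
import csv
import io
import re

# Column layout ASSUMED from the Step 5 prompt, not from the converter's docs:
# A = type, B = unused, C = points, D = question body,
# E = correct answer(s), F-J = answer choices.
VALID_TYPES = {"MC", "MR"}
# 0-100 with at most two decimal places, e.g. "1", "3.33", "100.00".
POINTS_PATTERN = re.compile(r"^\d{1,3}(\.\d{1,2})?$")


def check_row(row: list[str]) -> list[str]:
    """Return a list of problems found in one question row (empty if none)."""
    problems = []
    # Pad or trim to exactly ten cells so columns A-J can always be indexed.
    cells = [cell.strip() for cell in (list(row) + [""] * 10)[:10]]
    qtype, _unused, points, body, correct = cells[:5]
    choices = cells[5:]

    if qtype not in VALID_TYPES:
        problems.append(f"column A: unknown question type {qtype!r}")
    if not POINTS_PATTERN.match(points) or float(points) > 100:
        problems.append(f"column C: invalid point value {points!r}")
    if not body:
        problems.append("column D: question body is empty")

    # Numbers (as strings "1"-"5") of the answer choices that contain text.
    filled = {str(i) for i, choice in enumerate(choices, start=1) if choice}
    if len(filled) < 2:
        problems.append("columns F-J: fewer than two answer choices")

    # ASSUMPTION: MR items list several correct answers separated by commas.
    keys = [key.strip() for key in correct.split(",") if key.strip()]
    if not keys:
        problems.append("column E: no correct answer given")
    if qtype == "MC" and len(keys) > 1:
        problems.append("column E: MC items need exactly one correct answer")
    for key in keys:
        if qtype == "MC" and key == "0":
            continue  # the prompt's True/False convention: 0 means False
        if key not in filled:
            problems.append(f"column E: {key!r} does not point to a filled answer choice")
    return problems


def check_csv(text: str, has_header: bool = False) -> dict[int, list[str]]:
    """Map 1-based row numbers to their problems, for rows that have any."""
    report = {}
    for number, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if has_header and number == 1:
            continue  # skip a header row, if your template has one
        if not any(cell.strip() for cell in row):
            continue  # ignore blank lines
        problems = check_row(row)
        if problems:
            report[number] = problems
    return report


if __name__ == "__main__":
    sample = (
        "MC,,1,Which option is correct?,2,A,B,C,D\n"
        'MR,,1.5,Pick two.,"1,6",A,B,C\n'
    )
    # Row 2 is reported: only five answer columns (F-J) exist, so 6 is invalid.
    for row_number, problems in check_csv(sample).items():
        print(row_number, problems)
```

To check a file saved from Excel, you could read it with `open(path, newline="", encoding="utf-8-sig")`, since the `utf-8-sig` codec strips the byte-order mark that Excel’s “CSV UTF-8” format adds, and pass the text to `check_csv`. A clean report only means the layout looks right; it says nothing about whether the questions and answers are correct, which still has to be verified against the sources.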

Upload the .csv file to the K-State QTI converter. This produces a .zip file that can be uploaded to Canvas. Do not unzip it.

Step 7: Import into Canvas.

Navigate to your course, then:

- Select "Import Existing Content" from the navigation on the far right.

- Choose "QTI .zip file" from the "Content Type" drop-down.

- Select the .zip file as the source.

- Select "Create New Question Bank" (I have not been able to get my item banks to show in this list), then import.

- Find the new items under "Quizzes," not "Item Banks."

Is it worth the trouble?

It depends. Writing and testing prompts can be time-consuming, but the conversion and import are simple and fast. In my experience with this method so far, editing AI-generated questions was still much faster than writing them from scratch. Additionally, the AI’s questions gave me ideas for more questions that I could easily add to the spreadsheet. This approach may be less useful in smaller courses, where machine-gradable assessments are less needed, but it could be very helpful in large-enrollment courses.

If you would like help trying this process out in your course, contact an instructional designer assigned to your college.